In the span of about three days, I successfully migrated my blog over to Hugo. Hugo (no, not the 2011 movie) is a static site generator: you write Markdown files, and it renders them into HTML files. As you might guess from that blurb, Hugo doesn't host the content itself. It converts the content into a web-friendly format that any web server can serve.

Reasons

I jumped from my WordPress-based website to Hugo for four main reasons: speed, backups, freedom and flexibility, and potential liability.

Faster

Quite frankly, I don’t use even a fraction of the features that WordPress offers. My blog has no shop associated with it, comments have been disabled for a long time, and I was barely using any plugins. While WordPress is feature-rich, that richness comes at the cost of a heavy system load, which matters when I’m hosting on a Nanode from Linode with 1 CPU core and 1 GB of RAM.

Beyond the user-facing performance benefits, there’s also the admin experience. Logging in, drafting a post, and publishing it was too convoluted a process for a one-man army. If I logged in from a different location, or even opened a different tab, there was no telling which version of a draft I was looking at or which version would be saved. That uncertainty fed my writer’s block, since I’d have to remember where I left off and what I wanted to write about.

Backups

Because every post is a Markdown file, the entire site can be stored in a git repo. Having the whole website in git has additional benefits that I’ll outline later, but the immediate win is that I get revision history baked in, and I can store the repo wherever I want.
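As a rough illustration of “store it wherever I want”: a git repo can have several remotes, and a small helper can push every branch and tag to all of them. This is a sketch, not the setup used for this blog; the `push_everywhere` function and any `backup` remote are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: push every branch and tag to every configured remote, so each
# remote holds the full revision history. push_everywhere is a hypothetical
# helper, not a git command.
set -eu

push_everywhere() {
  local remote
  for remote in $(git remote); do
    echo "pushing to $remote"
    git push --quiet --all "$remote"   # all local branches
    git push --quiet --tags "$remote"  # all tags
  done
}
```

For example, after adding a second host with `git remote add backup ssh://git@backup.example.com/blog.git` (a placeholder URL), running `push_everywhere` inside the repo sends the same history to every remote.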

Freedom and Flexibility

WordPress runs on top of a LAMP stack: Linux, Apache, MySQL, and PHP. Having four distinct components brings benefits for high availability, load balancing, and manageability, among other things. The flip side of that last point is that four components are four things to manage. For a simple website, it’s not uncommon for all four to run on the same server with few system resources to spare, which makes it easy for one component to accidentally take down another. While I have uptime monitoring through Uptime Robot and Uptime Kuma, there were multiple situations where the site was only partially working, or the index page was the only page that loaded, yet the monitors still reported it as up.

Further, since Hugo’s output is pregenerated HTML, CSS, JavaScript, and images, I have much more flexibility in where I store the site, how I serve it, and where I serve it from. I may currently be using Apache2 on a 1 GB Nanode to store and serve the files, but nothing stops me from storing them in a Backblaze B2 bucket and serving them with Caddy on a DigitalOcean droplet, and the change would be transparent to visitors.

Serving statically generated HTML also made it easy to add pages that deviate from the standard template. The very first example is [a resume page](https://monicarose.tech/resume), which lets me keep an up-to-date, easy-to-modify resume going forward. Combined with the fact that I already take my class notes in Markdown, drafting a professional-looking post is quick.

Automattic/WP Engine Drama

I had already been looking into moving my blog to something that let me write Markdown-based content, and had tried some naive implementations of on-the-fly Markdown-to-HTML conversion, but never got the results I was looking for. What finally pushed me to migrate was the WordPress Foundation changing its trademark policy on how the terms “WP” and “WordPress” may be used, specifically calling out WP Engine as an example of infringement. After that, using any form of WordPress felt like a liability.

Prep

To prepare for the migration, I set up a git repo on my personal Gitea instance to give my content a place to be pushed to. After an initial commit, I set up a container on my Proxmox server that mirrored my public-facing web server, substituting Apache and Hugo for the LAMP stack. After some quick research, I also found that Drone is a CI/CD platform commonly paired with Gitea.

After deploying Drone and a basic pipeline, including one of the many Hugo plugins for Drone and an rsync plugin for Drone, I refined the pipeline until it deployed successfully. That let me focus on migrating the content and learning how to integrate static content like images. To migrate the content, I found wp2hugo, a tool written in Go that converts a WordPress export into Markdown files. Once those were committed, I finally had a baseline to push to production.
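Outside of Drone, the two pipeline steps (build with Hugo, then rsync the output to the web server) boil down to roughly the following. This is a minimal sketch rather than the actual pipeline: the `deploy_site` function, the temporary build directory, and the example target are assumptions, and the Drone plugins wrap these tools with their own settings.

```shell
#!/usr/bin/env bash
# Minimal sketch of a build-and-deploy step (not the actual Drone pipeline).
# deploy_site and its arguments are hypothetical names for illustration.
set -eu

deploy_site() {
  local src_dir="$1"  # Hugo project root
  local target="$2"   # rsync destination, e.g. deploy@example.com:/var/www/site/
  local build_dir
  build_dir="$(mktemp -d)"

  # Render the Markdown content into static HTML, CSS, and assets.
  hugo --source "$src_dir" --destination "$build_dir" --minify

  # Copy the rendered site to the web root. --delete removes files that no
  # longer exist in the build, so deleted posts disappear from the server.
  rsync -rltvz --delete "$build_dir"/ "$target"

  rm -rf "$build_dir"
}
```

Usage would look like `deploy_site . deploy@example.com:/var/www/monicarose.tech/` with a placeholder host. The sketch uses `-rlt` instead of `-a` so the build machine’s modes and group aren’t copied to the server; ownership and permissions still have to be fixed up on the server afterwards.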

Deploying to Production

To deploy to production, I knew there was one major obstacle to overcome. Besides an iptables-based firewall on the VM itself, I also use Linode’s firewall rules. Because my Drone instance runs in my homelab, which has a dynamic IP address, any rule allowing it in would go stale whenever that address changed. To the best of my knowledge, Linode’s firewall doesn’t let a rule reference a DNS hostname instead of an IP address, so I wrote a simple Python script, packaged in a Docker container, that updates the firewall. It’s by no means perfect, but it should be good enough for a basic implementation.
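The script itself isn’t shown here. As a rough shell sketch of the same idea (not the actual Python script), the snippet below looks up the current public IP and replaces the firewall’s rule set through the Linode API v4 endpoint `PUT /networking/firewalls/{firewallId}/rules`. Assumptions to check against Linode’s API docs before use: the `LINODE_TOKEN` and `FIREWALL_ID` environment variables, api.ipify.org as the IP lookup service, and the example rules. Because this endpoint replaces the whole rule set, every inbound rule you still need (such as HTTP/HTTPS) must be included.

```shell
#!/usr/bin/env bash
# Sketch only: refresh a Linode firewall so SSH is allowed from this
# network's current public IP. LINODE_TOKEN, FIREWALL_ID, the rule labels,
# and the use of api.ipify.org are assumptions, not the original setup.
set -eu

# Print the rule-set JSON for a given IPv4 address. The PUT endpoint
# replaces ALL rules, so the public web rule is listed here too; add any
# other rules you rely on (e.g. your own admin SSH access).
build_rules_json() {
  printf '{"inbound_policy":"DROP","outbound_policy":"ACCEPT","outbound":[],"inbound":[%s,%s]}' \
    '{"label":"allow-web","action":"ACCEPT","protocol":"TCP","ports":"80, 443","addresses":{"ipv4":["0.0.0.0/0"],"ipv6":["::/0"]}}' \
    "{\"label\":\"allow-ci-ssh\",\"action\":\"ACCEPT\",\"protocol\":\"TCP\",\"ports\":\"22\",\"addresses\":{\"ipv4\":[\"$1/32\"]}}"
}

update_firewall() {
  local ip
  ip="$(curl -fsS https://api.ipify.org)"
  # Refuse anything that isn't made of digits and dots before putting it
  # into the JSON body.
  case "$ip" in
    ""|*[!0-9.]*) echo "unexpected IP: '$ip'" >&2; return 1 ;;
  esac
  build_rules_json "$ip" | curl -fsS -X PUT \
    -H "Authorization: Bearer ${LINODE_TOKEN}" \
    -H "Content-Type: application/json" \
    --data @- \
    "https://api.linode.com/v4/networking/firewalls/${FIREWALL_ID}/rules"
}
```

Running `update_firewall` on a schedule (for example from cron, or a loop inside the container) keeps the rule in step with the homelab’s IP whenever it changes.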

After confirming the script worked, I added the CI/CD pipeline’s SSH key, adjusted permissions so the pipeline wouldn’t need to elevate to root, and backed up the WordPress website in case anything went wrong. Predictably, things did go wrong, particularly around permissions.

Although the website shouldn’t have any server-side executable code on it, it’s still good practice to limit permissions as much as possible. With that in mind, here’s the permissions step from my CI/CD pipeline. Tell me if you see any issues:

```shell
chown -R mhanson:www-data /var/www/monicarose.tech/
chmod -R 640 /var/www/monicarose.tech/
```

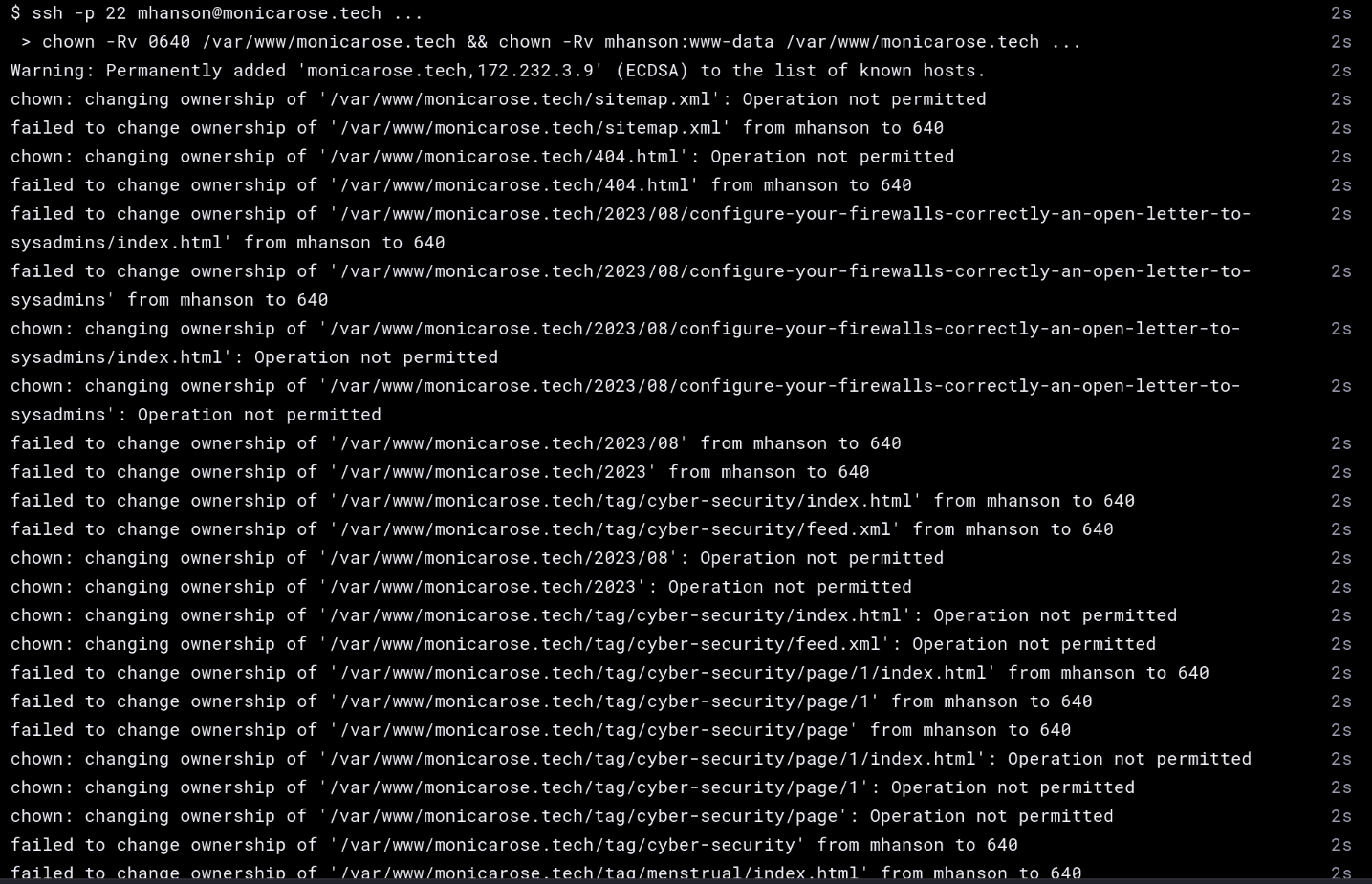

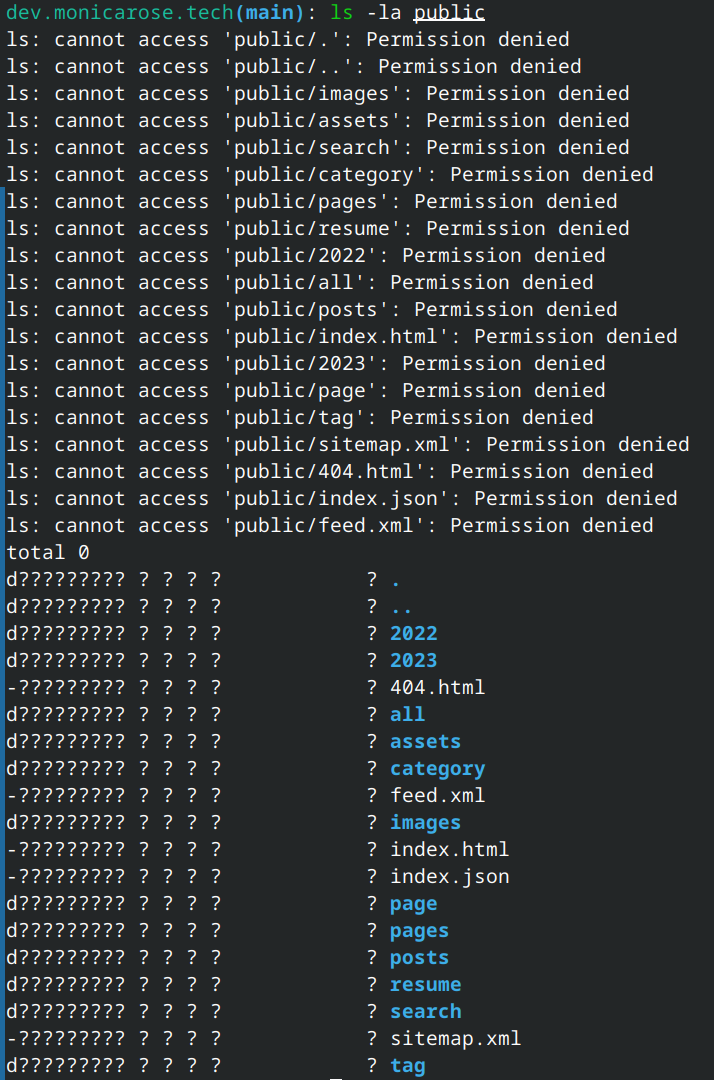

By a show of hands, how many of you caught the error? Still not? Let me throw in the verbose output and the resulted directory listing:

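Still don’t see it? Don’t feel bad; I didn’t catch it for about 20 minutes. Unix permissions are fairly self-explanatory with one exception: a directory needs the execute bit to be navigable. `chmod -R 640` removes execute from every directory, including the web root itself, so the `www-data` group (which Apache uses to read the files) can no longer traverse into the site. The more correct version is: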

```shell
chown -R mhanson:www-data /var/www/monicarose.tech/
find /var/www/monicarose.tech/ -type d -exec chmod 750 {} \;
find /var/www/monicarose.tech/ -type f -exec chmod 640 {} \;
```

To break down the change: the last two lines use `find` to set permissions on directories (the second command, with `-type d`) and on files (the third command, with `-type f`) independently. Directories get 750, which keeps them executable and therefore navigable for the owner and the `www-data` group, while files stay at 640 and are never executable.
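To see the difference on a throwaway tree rather than the real web root, the sketch below applies the two `find` passes and, for comparison, the single-command alternative `chmod -R u=rwX,g=rX,o=`, where the capital `X` grants execute only to directories (and to files that already have an execute bit). The scratch paths come from `mktemp`, and `stat -c` assumes GNU coreutils; on BSD or macOS the `stat` flags differ.

```shell
#!/usr/bin/env bash
# Sketch: set directory and file modes separately on scratch trees
# (mktemp directories, not the real web root). Needs GNU stat for -c.
set -eu

make_tree() {
  mkdir -p "$1/posts/hello"
  echo '<h1>home</h1>' > "$1/index.html"
  echo '<p>post</p>' > "$1/posts/hello/index.html"
}

# Approach 1: two find passes, one per file type.
site="$(mktemp -d)"
make_tree "$site"
find "$site" -type d -exec chmod 750 {} \;
find "$site" -type f -exec chmod 640 {} \;

# Approach 2: one chmod with capital X, which adds execute only to
# directories (and to files that already had an execute bit).
alt="$(mktemp -d)"
make_tree "$alt"
chmod -R u=rwX,g=rX,o= "$alt"

# Prints 750 for each directory and 640 for each file.
stat -c '%a %n' "$site/posts" "$site/posts/hello/index.html"
stat -c '%a %n' "$alt/posts" "$alt/posts/hello/index.html"
```

Both trees end up with 750 directories and 640 files. The capital `X` form is shorter, but it leaves any already-executable file executable, so the two `find` passes are the more explicit choice.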

Takeaways

Quite frankly, the main takeaway from this migration is to make your development environment mirror production as closely as possible. I would have caught the permission and ownership issues early if I had taken the time to set up a low-privilege user in my development environment. Between that and spending more time testing locally, I think most of these issues would never have reached production.
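Along the lines of that takeaway, one cheap safeguard is to check the deployed tree’s mode bits before calling a deploy done. The sketch below flags directories the group can’t traverse and files the group can’t read. The `check_web_perms` name is hypothetical, and it assumes the web server reads the site through the files’ group (as with `www-data` above). Checking mode bits works even when run as root, whereas simply browsing the files as root would hide the problem, since root bypasses these permission checks.

```shell
#!/usr/bin/env bash
# Sketch of a post-deploy permission check. check_web_perms is a
# hypothetical helper; it assumes the web server reads files via the group.
set -eu

check_web_perms() {
  local root="$1" bad_dirs bad_files status=0
  # Directories without group execute (search) permission can't be
  # traversed by the web server. `|| true` keeps going if find itself
  # can't descend into one of the directories it is flagging.
  bad_dirs="$(find "$root" -type d ! -perm -g+x 2>/dev/null || true)"
  # Files without group read permission can't be served.
  bad_files="$(find "$root" -type f ! -perm -g+r 2>/dev/null || true)"
  if [ -n "$bad_dirs" ]; then
    printf 'not traversable by group:\n%s\n' "$bad_dirs" >&2
    status=1
  fi
  if [ -n "$bad_files" ]; then
    printf 'not readable by group:\n%s\n' "$bad_files" >&2
    status=1
  fi
  return "$status"
}
```

Run after the `chown`/`chmod` step (for example `check_web_perms /var/www/monicarose.tech/`), it would flag every directory left behind by `chmod -R 640` and pass on the 750/640 layout.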